Emma: After the Green Bar

By Michael Easter, OCI Senior Software Engineer

February 2007

Introduction

Code coverage improves software quality by illumination: it shines light on executed code, and reveals dark corners that are untested or never used. This software metric can enhance many projects, from standard business apps to those with ultra-low tolerance for error (e.g. a medical device). Historically, however, code coverage measurement has been either (a) applied wholesale to the entire project, usually late in the development cycle, or (b) dropped entirely on projects outside formal, regulated domains.

With the rise of agile methodologies such as Test-Driven Development (TDD) and landmark tools like JUnit, code coverage is gaining new traction. There are now coverage tools that strive for two goals: to simplify generation of project-wide statistics, and to establish code coverage as an integrated, "next step" in the iterative process of agile development.

This article examines one such tool, Emma, and illustrates both the agile and project-wide aspects of modern, post-JUnit code coverage using a simple Java example.

Overview

Emma is an open-source toolkit for measuring and reporting code coverage statistics of Java apps. It has a liberal license (the Common Public License), a myriad of reporting options, and strives for speed and a small footprint on the Java project.

Emma operates in 3 steps:

1. Instrumentation

Emma uses bytecode enhancement to instrument Java class files for measurement. Emma neither modifies nor requires Java source code (an appealing feature), and has no external runtime dependencies. Emma works quickly, and claims to be faster than coverage tools based on the JVM Profiler/Debug Interfaces. Using filters, one can target specific areas of the code base, which is key to the agile aspect.

2. Measurement

A "driver" program exercises the instrumented Java classes, and the Emma hooks generate raw statistics. Emma is agnostic with respect to the type of driver: it can be a JUnit test, a web server or a simple main() method.

3. Reporting

Emma consolidates the data collected during measurement and generates reports in the usual formats (HTML, XML, etc). Reports provide drill-down functionality, and link to the source code where available.

Modes

There are two types of instrumentation: on-the-fly and offline. These types offer two modes of usage.

1. On-The-Fly

In this simple mode, Emma acts as an application runner. In a single step, Emma uses a custom class loader to instrument the classes in memory, execute the code (with measurement), and generate the report. This mode is useful both for beginners and for experimentation with Emma's options. One can also quickly examine a small area of code. The philosophy is analogous to an interactive IDE window for a scripting language.

2. Offline

In this industrial-strength mode, there are separate steps for the instrumentation, measurement, and reporting phases. First, Emma instruments classes to disk in a "post compilation" step. These classes execute as normal in a "run step", with the standard JVM and class loaders -- the only difference is that Emma's hooks covertly write measurement data to disk. A final step generates the report from the data. This mode is mandatory for environments with sophisticated class loading, such as J2EE web/app servers, and is the best fit for production build environments.

Coverage Units

Two primary methods for determining code coverage are line coverage and path coverage. Line coverage answers "How many lines have been executed?". Path coverage answers "How many paths of execution have been exercised?". Like many tools, Emma doesn't provide path coverage (a difficult theoretical problem), but instead concentrates on line coverage. However, Emma's approach is clever and has unique advantages.

In Emma, the fundamental unit of coverage is the basic block, defined as a sequence of bytecode instructions that does not contain jump-related code. For example:

    if( x > 2 ) {
        // a basic block
        int y = x * 2;
        System.out.println("y = " + y);
    } else {
        // another basic block
        int z = x + 15;
        int y = z * 100;
        System.out.println("y = " + y + " ; z = " + z);
    }

Essentially, Emma marks the beginning and end of basic blocks, and tracks these "book-ends" during measurement (by definition, basic blocks are atomic, in the absence of exceptions). This technique is the key to Emma's indifference to the source code. To infer line coverage, Emma maps information about basic blocks to source information (contained in the Java class).
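To make the idea of tracking basic blocks concrete, here is a minimal hand-written sketch. It is not Emma's generated code: Emma works at the bytecode level, whereas this version places the probes in source form. The class name, the HIT array, and the choice to record each block once on entry are all assumptions made for illustration.

```java
import java.util.Arrays;

// Conceptual sketch: hand-written probes standing in for bytecode instrumentation.
// All names here are hypothetical; this is not Emma's generated code.
final class BlockProbeSketch {
    // One flag per basic block:
    // 0 = evaluate the condition, 1 = "if" body, 2 = "else" body.
    static final boolean[] HIT = new boolean[3];

    static int compute(int x) {
        HIT[0] = true;                 // block 0: the comparison and jump
        if (x > 2) {
            HIT[1] = true;             // block 1: straight-line "if" body
            return x * 2;
        } else {
            HIT[2] = true;             // block 2: straight-line "else" body
            int z = x + 15;
            return z * 100;
        }
    }

    // Counts how many blocks have been entered so far.
    static int coveredBlocks() {
        int covered = 0;
        for (boolean hit : HIT) {
            if (hit) {
                covered++;
            }
        }
        return covered;
    }

    // Clears all flags, as if starting a new measurement run.
    static void reset() {
        Arrays.fill(HIT, false);
    }

    public static void main(String[] args) {
        reset();
        compute(5);                    // takes only the "if" branch
        System.out.println(coveredBlocks() + " of " + HIT.length + " blocks covered");
    }
}
```

A driver that only ever passes values greater than 2 leaves the "else" flag unset, which is exactly the kind of gap a coverage report surfaces.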

It isn't obvious, but a line of code can contain multiple basic blocks:

    // contains jump-related bytecode and so has multiple basic blocks
    boolean isGreater = ( y > 10 ) ? true : false;

With the basic block approach, Emma can report fractional line coverage, where some (but not all) of the line's constituent blocks have been executed.
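The sketch below shows one way per-line figures could be derived from block data. The Block type, the line numbers, and the number of blocks assigned to each line are hypothetical sample data, not what a compiler or Emma would necessarily produce.

```java
import java.util.Map;
import java.util.TreeMap;

// Sketch of deriving per-line coverage from basic-block data.
// The Block type and the sample data are hypothetical.
final class FractionalLineCoverage {

    static final class Block {
        final int line;       // source line the block maps to
        final boolean hit;    // whether the block executed

        Block(int line, boolean hit) {
            this.line = line;
            this.hit = hit;
        }
    }

    /** Returns, for each line, a two-element array {coveredBlocks, totalBlocks}. */
    static Map<Integer, int[]> perLine(Block[] blocks) {
        Map<Integer, int[]> result = new TreeMap<Integer, int[]>();
        for (Block b : blocks) {
            int[] counts = result.get(b.line);
            if (counts == null) {
                counts = new int[2];
                result.put(b.line, counts);
            }
            if (b.hit) {
                counts[0]++;
            }
            counts[1]++;
        }
        return result;
    }

    /** Labels a line by how many of its blocks ran. */
    static String classify(int covered, int total) {
        if (covered == 0) {
            return "not covered";
        }
        return covered == total ? "fully covered" : "partially covered";
    }

    public static void main(String[] args) {
        // Line 10 stands for a ternary: two of its three blocks ran.
        Block[] blocks = {
            new Block(10, true), new Block(10, true), new Block(10, false),
            new Block(11, true)
        };
        for (Map.Entry<Integer, int[]> e : perLine(blocks).entrySet()) {
            int[] c = e.getValue();
            System.out.println("line " + e.getKey() + ": " + c[0] + "/" + c[1]
                    + " blocks, " + classify(c[0], c[1]));
        }
    }
}
```

A tool that counted only whole lines would have to call line 10 either covered or not; tracking blocks lets it report the line as partially covered instead.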

Example 1: TDD

Our first example with Emma uses on-the-fly mode in a TDD setting.

Though a full exposition is outside the scope of this article, TDD is an agile development methodology that relies heavily on an integrated testing framework; JUnit is a popular choice. TDD mandates that we write tests before writing any new code, and drives software with the following cycle:

- Write a test for new functionality. Ensure that it fails (represented by a red bar in JUnit)

- Write new code so that the test passes (represented by a green bar in JUnit)

- Expand functionality by repeating with new tests. Use imagination/dialogue to inspire these new tests.

Proponents of TDD with JUnit often use "red" and "green" as synonyms for "fail" and "pass", respectively. The above cycle is often called "red-green testing".

Our assignment: use TDD to develop a Java class that evaluates a 3-card poker hand, according to the hand rankings (available here) which are possible for three cards. Bonus points are awarded for a solution which scans the cards only once. Fragments will be shown below. The full source is available here. See Example Notes below for system requirements.

Red Bar

In accordance with TDD, we first develop a test case (for a "pair") using JUnit:

    public void testIsPair_Pair() {
        pokerHand = buildHand( Card.TWO, Card.CLUBS,
                               Card.TWO, Card.HEARTS,
                               Card.NINE, Card.SPADES );
        PokerHand.Hand result = pokerHand.evaluate();
        assertEquals( PokerHand.Hand.PAIR, result );
    }

Since we don't have an evaluate() method, the test will certainly fail.

Green Bar

Following the cycle, we develop the code to go "green":

    public Hand evaluate() {
        Hand result = null;

        isPair = false;

        Iterator iterator = cards.iterator();
        Card previousCard = (Card) iterator.next();

        while( iterator.hasNext() ) {
            Card thisCard = (Card) iterator.next();

            if( previousCard.equalsRank(thisCard) ) {

                if( !isPair ) {
                    // one pair encountered
                    isPair = true;
                } else {
                    // we have already seen one pair, so this 2nd pair => 3 of a kind
                    isPair = false;
                }
            }

            previousCard = thisCard;
        }

        result = (isPair) ? Hand.PAIR : Hand.HIGH_CARD;

        return result;
    }

(Note: keen readers will see that the code does slightly more than identify a pair: it also discounts a 3-of-a-kind. Purists insist that the code should satisfy the test and no more. However, this is a simple example for illustration. The ideas in this article apply to pure TDD as well, both for more sophisticated red-green testing and for refactoring.)

After the Green Bar

At this point, the test passes. We might move on to write a counter test: e.g. passing a 3-of-a-kind into the class to ensure it fails. However, this is precisely where Emma adds value in the TDD regimen, by "spying" on the current code via coverage. Using the on-the-fly mode, we re-run the unit test through Emma:

    java -cp emma.jar emmarun -r html -sourcepath . -cp .;junit.jar \
         junit.textui.TestRunner com.ociweb.emma.ex1.PokerHandTestCase

This command packs a lot into a single step. The JVM runs emmarun (Emma's application runner), which in turn runs JUnit (which runs the test). The report is in HTML (-r html) with links to the source code (-sourcepath .). Note that the classpath above uses the Windows separator (";"); on Unix-like systems, use ":" instead.

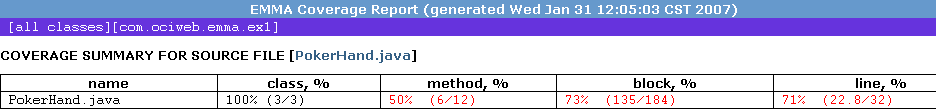

The initial report reveals that only 73% of the blocks were covered:

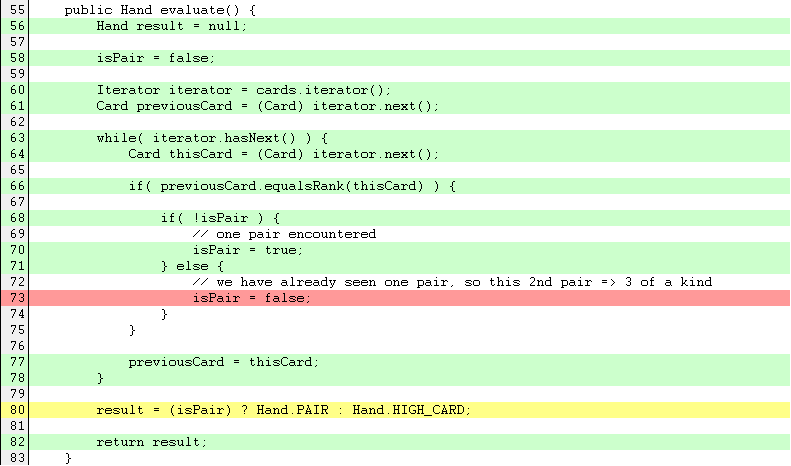

With the link to the source, Emma provides a colorful code listing:

The green lines were covered by the test. The yellow line is an example of fractional line coverage, as described earlier. It confirms that we have only tested the "happy path" for a pair.

The red line is of particular interest: this code has not been executed. On examination, it is apparent that the first test passes because the hand is of the form { X, X, Y }. This implies a new test of the form { X, Y, X }:

    public void testIsPair_UnsortedPair() {
        pokerHand = buildHand( Card.TWO, Card.CLUBS,
                               Card.THREE, Card.HEARTS,
                               Card.TWO, Card.SPADES );
        PokerHand.Hand result = pokerHand.evaluate();
        assertEquals( PokerHand.Hand.PAIR, result );
    }

As expected, this test fails. One fix is to sort the cards by rank upon creation of the PokerHand class (at the risk of forfeiting the bonus points mentioned above).
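The sorting fix can be sketched in a self-contained form. This is not the article's PokerHand source: ranks are reduced to plain integers, the class name is hypothetical, and the Hand enum carries only the two values used so far. As in the code above, a 3-of-a-kind is simply reported as "not a pair" at this stage.

```java
import java.util.Arrays;

// Simplified stand-in for the article's PokerHand class (hypothetical names).
// Ranks are plain ints; suits are omitted because pair detection ignores them.
final class SortedPokerHandSketch {
    enum Hand { HIGH_CARD, PAIR }

    private final int[] ranks;

    SortedPokerHandSketch(int... ranks) {
        this.ranks = ranks.clone();
        Arrays.sort(this.ranks);       // equal ranks become adjacent
    }

    Hand evaluate() {
        boolean isPair = false;
        for (int i = 1; i < ranks.length; i++) {
            if (ranks[i - 1] == ranks[i]) {
                // First match => pair; a second match => 3 of a kind, so not a pair.
                isPair = !isPair;
            }
        }
        return isPair ? Hand.PAIR : Hand.HIGH_CARD;
    }

    public static void main(String[] args) {
        // { X, Y, X }: found only because the constructor sorted the ranks.
        System.out.println(new SortedPokerHandSketch(2, 3, 2).evaluate());
        // { X, X, X }: discounted, as in the article's evaluate() method.
        System.out.println(new SortedPokerHandSketch(2, 2, 2).evaluate());
    }
}
```

Sorting keeps the adjacent-card comparison valid for any card order, but the sort is an extra pass over the cards, which is why it may cost the single-scan bonus.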

By iterating this red-green-coverage cycle, Emma inspires two more tests:

    public void testIsPair_HighCard() {
        pokerHand = buildHand( Card.TWO, Card.CLUBS,
                               Card.QUEEN, Card.HEARTS,
                               Card.SIX, Card.SPADES );
        PokerHand.Hand result = pokerHand.evaluate();
        assertEquals( PokerHand.Hand.HIGH_CARD, result );
    }

    public void testIsPair_ThreeOfKind() {
        pokerHand = buildHand( Card.TWO, Card.CLUBS,
                               Card.TWO, Card.HEARTS,
                               Card.TWO, Card.SPADES );
        PokerHand.Hand result = pokerHand.evaluate();
        assertFalse( PokerHand.Hand.PAIR == result );
    }

At this point, the evaluate() method is rendered completely green. The new code is available here. See Example Notes below for system requirements.

Coverage-Driven Development? Caveat Testor

We see evidence that Emma adds value to the TDD dev-cycle by adding a new "coverage" step after JUnit's green bar. How much value is added? As much as testing? Consider the following:

- After the green bar, TDD relies on imagination and intuition to write further tests.

- Yet as a discipline, TDD does not trust imagination/intuition to write the software. It places tremendous trust in the tests, predicated by the testing framework.

- Given the example above, and that (as we'll see) there are Emma-based tools in popular IDEs, can we place similar faith in code coverage? If the software works only when all of the tests pass, does it work only when it is completely covered as well?

In other words, if TDD drives software with tests, one wonders if code coverage can either (a) drive the tests or (b) give us a new-age "coverage harness" that implies the software is truly complete. Are we seeing the emergence of a new software discipline: Coverage-Driven Development (CDD)?

The answer is: no. Unfortunately, CDD overstates the case for current coverage tools. No doubt: they are useful, but several articles (see "Don't Be Fooled By The Coverage Report" in references) advise caution on the idiosyncrasies of code coverage reports. Here are some caveats:

- Covered code may not be tested well: code can be covered and yet have no assertions. "Covered" is not tantamount to "correct".

- Covered code may contain subtle traps based on the input, particularly with respect to exceptions.

- Tools that perform line coverage can be misleading with respect to conditional statements. (Emma mitigates this with its fractional line coverage.)

The main theme here is a false sense of security with respect to covered code. Code coverage works best to identify un-executed code and to assist the inspiration of new tests. Despite the caveats, though, this assistance is welcome and powerful.
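The first caveat is easy to reproduce. In the hypothetical sketch below (none of these names come from the article's examples), a "test" executes every block of a buggy method and would show it as fully covered, yet it verifies nothing. Only the version with an assertion exposes the defect.

```java
// Hypothetical illustration of "covered is not tantamount to correct".
final class CoveredButWrong {

    /** Deliberately buggy: intended to return the larger of the two values. */
    static int max(int a, int b) {
        return a < b ? a : b;          // bug: returns the smaller value
    }

    /** Exercises both arms of the ternary but checks nothing, so it always "passes". */
    static void testWithoutAssertions() {
        max(1, 2);
        max(2, 1);
    }

    /** Covers the same code but checks the result; returns false because of the bug. */
    static boolean testWithAssertion() {
        return max(1, 2) == 2 && max(2, 1) == 2;
    }

    public static void main(String[] args) {
        testWithoutAssertions();
        System.out.println("assertion-free test completed without complaint");
        System.out.println("asserting test passed: " + testWithAssertion());
    }
}
```

Both tests would produce the same coverage figures for max(); the report alone cannot distinguish between them.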

Example 2: Offline Mode with JUnit

We illustrate offline mode with an example of a "project-wide" coverage report (though the project is admittedly small). Example 2 (source available here, see Example Notes below for system requirements) contains a full implementation of the PokerHand class, together with an extensive list of JUnit tests. An Ant build compiles, then instruments, the Java class files, using Emma's ant task:

    <target name="instrument" depends="init, compile" description="offline instrumentation">
        <emma enabled="true">
            <instr instrpathref="run.classpath"
                   destdir="${instr.dir}"
                   metadatafile="${coverage.dir}/metadata.emma"
                   merge="true"/>
        </emma>
    </target>

The standard JUnit ant task is used to run the tests:

    <target name="run" depends="instrument" description="run the junit tests">
        <junit fork="yes" haltonfailure="yes">
            <classpath>
                <pathelement location="${instr.dir}"/>
                <path refid="run.classpath"/>
                <fileset dir="${lib.dir}">
                    <include name="**/*.jar"/>
                </fileset>
            </classpath>
            <test name="com.ociweb.emma.PokerHandTestCase"/>
        </junit>
    </target>

Finally, another Emma ant task generates the report:

    <target name="report" depends="run" description="create the Emma report">
        <emma enabled="true">
            <report sourcepath="${src.dir}">
                <fileset dir="${coverage.dir}">
                    <include name="*.emma"/>
                </fileset>
                <html outfile="${coverage.dir}/coverage.html"
                      depth="method"
                      columns="name,class,method,block,line"/>
            </report>
        </emma>
    </target>

The resulting report is similar to the above examples, except that the coverage is dramatically increased.

Example 3: Offline Mode with Tomcat

Tomcat is a popular, open-source servlet container. It is a reference implementation for Sun's Java Servlet and JSP technologies. Designed to handle large-scale web applications, it has a complex class loading scheme that serves as an excellent example of Emma's offline instrumentation.

The Tomcat example (source available here, see Example Notes for system requirements) tests the PokerHand class via a simple web app, where an HTML file is used to invoke the PokerHand class via a servlet. The build steps are as follows:

- The Ant build compiles the Java source and instruments the classes.

- The instrumented classes and web resources are bundled into a WAR file.

- Tomcat must have access to the Emma jar. One way is to copy emma.jar to the $JAVA_HOME/jre/lib/ext directory, where JAVA_HOME points to the JVM used by Tomcat.

- The user deploys the WAR file, starts Tomcat, and tests via the HTML page.

- When Tomcat is shut down, coverage.ec is written to $TOMCAT_HOME/bin.

- The above file is copied to the example directory so that the report can be generated.

Again, the resulting report is similar, where the coverage level is a direct reflection of the number of tests explored via the browser.

Emma Usage

Other Features

These examples use Emma in a simple, default manner. Here is a quick tour of some advanced options:

- Most of Emma's functionality is exposed through parallel command-line tools and Ant tasks. Properties may be set via JVM properties, external files and Ant tasks/subtasks with a flexible override behavior.

- As mentioned, Emma can produce reports in HTML, XML and plain text. Other options include: report depth (e.g. package, class or method), column ordering and sort ordering for a given column.

- Emma allows multiple coverage sessions, which are essentially "runs" of the software. These sessions can be merged into a single coverage report.

- Emma defaults to using weighted basic blocks, where the weighting is based on the number of bytecode instructions in a block. This can be turned off for those who desire traditional, unweighted metrics.
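Two of the options above, merged sessions and weighted blocks, can be illustrated with a small calculation. This is a sketch under stated assumptions, not Emma's implementation: the class and method names are hypothetical, the instruction counts are invented, merging is modeled as "covered in either session", and weighted coverage is assumed to be covered instructions divided by total instructions.

```java
// Hypothetical sketch: merge two coverage sessions, then compare
// unweighted and instruction-weighted block coverage.
final class WeightedCoverageSketch {

    /** A block counts as covered if either session executed it. */
    static boolean[] merge(boolean[] sessionA, boolean[] sessionB) {
        boolean[] merged = new boolean[sessionA.length];
        for (int i = 0; i < merged.length; i++) {
            merged[i] = sessionA[i] || sessionB[i];
        }
        return merged;
    }

    /** Unweighted percentage: every block counts as 1. */
    static double unweightedPercent(boolean[] hit) {
        int covered = 0;
        for (boolean h : hit) {
            if (h) {
                covered++;
            }
        }
        return 100.0 * covered / hit.length;
    }

    /** Weighted percentage: each block counts as its number of bytecode instructions. */
    static double weightedPercent(boolean[] hit, int[] instructions) {
        int covered = 0;
        int total = 0;
        for (int i = 0; i < hit.length; i++) {
            total += instructions[i];
            if (hit[i]) {
                covered += instructions[i];
            }
        }
        return 100.0 * covered / total;
    }

    public static void main(String[] args) {
        int[] instructions = {2, 10, 8};          // invented block sizes
        boolean[] run1 = {true, false, false};    // first session
        boolean[] run2 = {false, true, false};    // second session
        boolean[] merged = merge(run1, run2);

        System.out.println("unweighted: " + Math.round(unweightedPercent(merged)) + "%");
        System.out.println("weighted:   " + Math.round(weightedPercent(merged, instructions)) + "%");
    }
}
```

With this sample data the merged sessions cover two of three blocks (about 67%), but only 12 of 20 instructions (60%), because the uncovered block is a relatively large one.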

IDEs

This article has concentrated on using the original Emma toolkit, but, as noted, other tools leverage Emma to provide similar functionality. For example, code coverage in IntelliJ IDEA is based on Emma. EclEmma is a plugin for Eclipse, and there is a plugin for NetBeans. Note, however, that these tools are not guaranteed to provide access to all of Emma's underlying options.

Summary

With tools such as Emma, code coverage is no longer an after-thought for only those projects that have the luxury of extra time or the mandate of formal regulation. It is emerging as a key software metric for both project-wide and agile, task-specific environments.

For project-wide statistics, Emma's ease of use means that coverage can be measured on standard projects throughout the development cycle. For example, a nightly build can generate code coverage reports based on test suites.

For agile development, Emma enables a newfound niche in test-first methodologies. The application of code coverage, after the green bar, acts as a powerful spotlight, illuminating the effect of the test and inspiring others. Though it is true that coverage reports require cautious interpretation, and that we can't completely trust a "coverage harness", the overall effect is positive and compelling.

With its speed, unbeatable price-per-seat (i.e. free) and generous license, Emma covers its bases, and adds value to any Java project.

Example Notes

The examples in this article use Emma 2.0.5312, Java 1.5.x, Ant 1.6.x, Tomcat 5.5.x and JUnit 3.8.x. It is assumed that Java, Ant, and Tomcat are installed on the machine. Emma, JUnit and the Servlet-API jars are included in the example download for convenience.

Note that Emma claims to work in any J2SE runtime environment, and requires Ant 1.4.1 or higher.

References

- [1] Emma
  http://emma.sourceforge.net/

- [2] Don't Be Fooled By The Coverage Report
  http://www-128.ibm.com/developerworks/java/library/j-cq01316/

- [3] Code Coverage
  http://en.wikipedia.org/wiki/Code_coverage

- [4] EclEmma
  http://eclemma.org/

- [5] Emma plugin for Netbeans
  http://blogs.sun.com/xzajo/entry/emma_code_coverage_for_netbeans

Michael Easter thanks Dean Wette, Tom Wheeler, Dan Troesser, Lance Finney and Mario Aquino for reviewing this article and providing useful suggestions.

Software Engineering Tech Trends (SETT) is a regular publication featuring emerging trends in software engineering.